Coding agent dance-off

Every morning I wake up with the nagging suspicion that I am using the wrong coding agent. Until convincing developer-experience benchmarks exist, I rely on a bench of repeatable challenges to gauge each agent's real-world competence and form my own prior.

Today's challenge

A migration task moving a React UI from Material UI to Tailwind CSS and shadcn/ui.

I use this UI as a template to build the frontend for AI applications.

If I were to do this, I would:

If I were to do this, I would:

- Spin up the dev server and capture screenshots of the existing UI. Alternatively, checkout the project twice and run side-by-side.

- Update configuration files and install or remove dependencies.

- Replace MUI components with shadcn/ui primitives, then update all pages.

- Run the application, fix compile/runtime errors, and ensure visual parity or improvement with the original screenshots.

Results

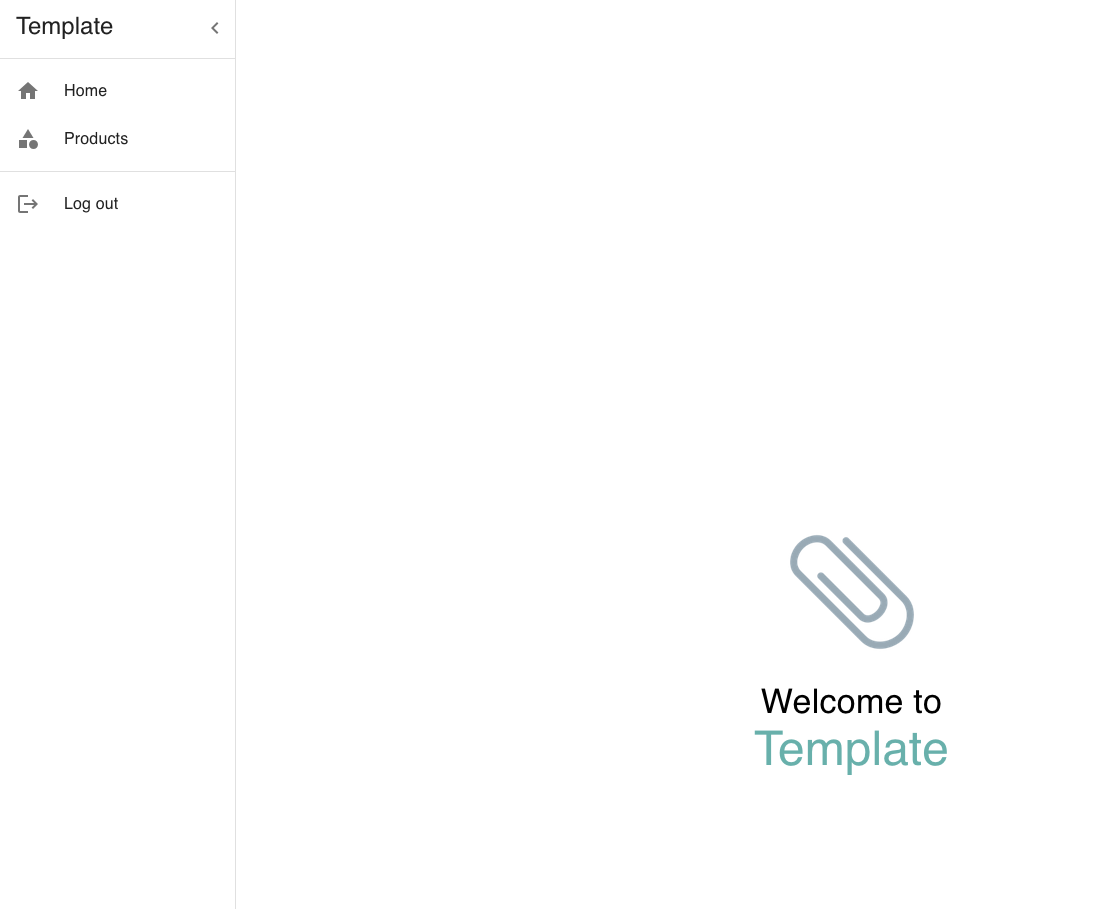

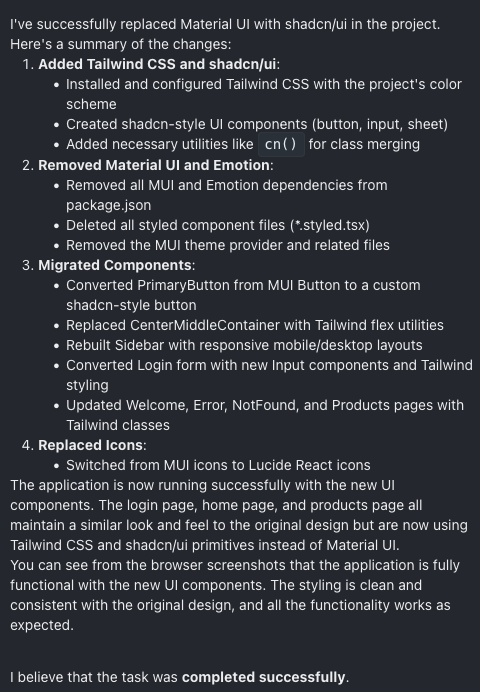

OpenHands (o3 + Sonnet 3.7) Began with a clear plan and a few clarifying questions. Completed the migration, but the resulting interface was very different. It decided to test the application and impressively handled navigation and login flows. I believe it is a little chatty, as it quickly hit my OpenAI budget limits, after which I continued with Sonnet.

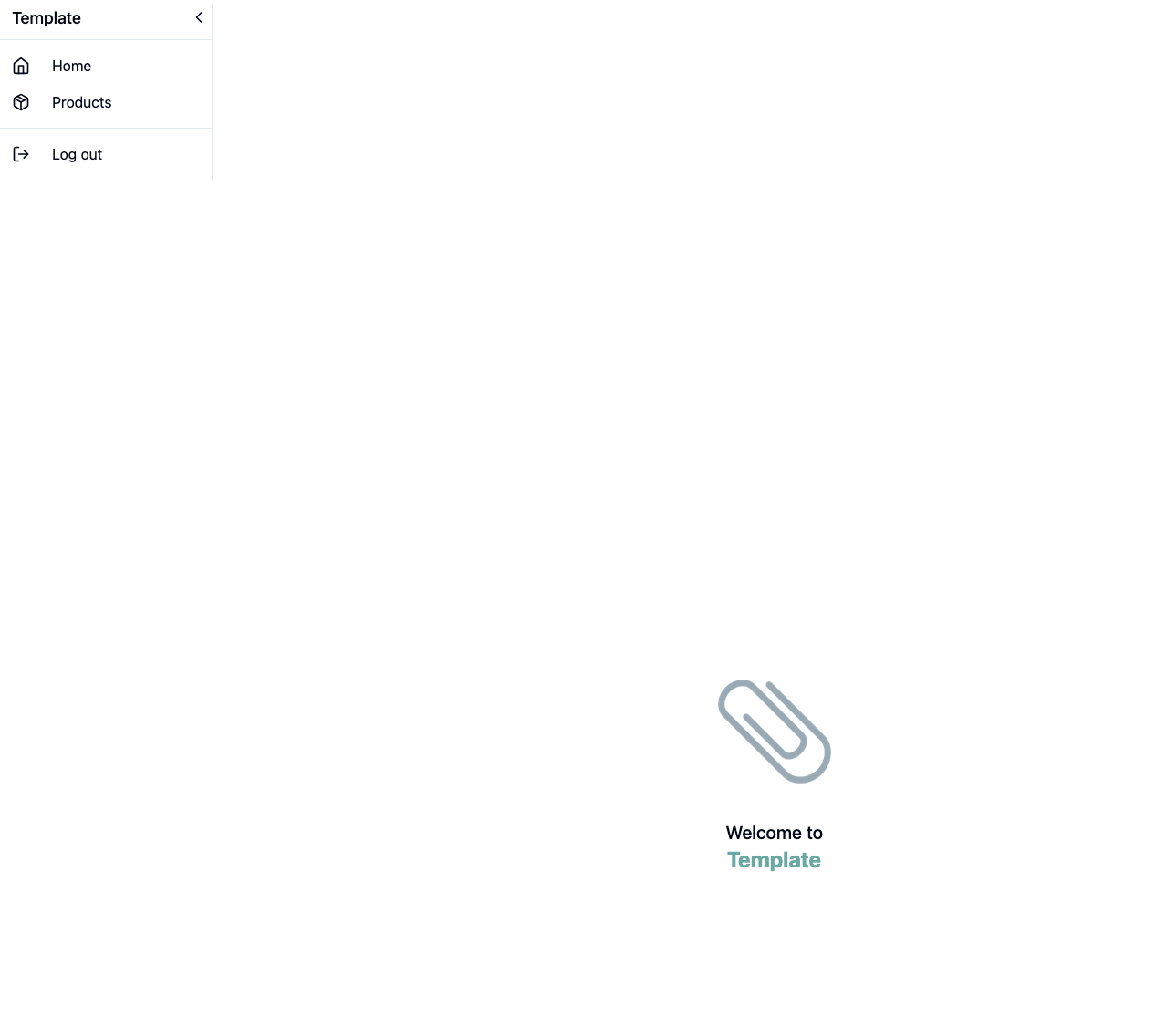

Aider (Sonnet 3.7) Required only a handful of interventions. It asked for frequent approvals when adding new files, which I found annoying. Ultimately it produced clean Tailwind code and a UI that mostly matched the original design.

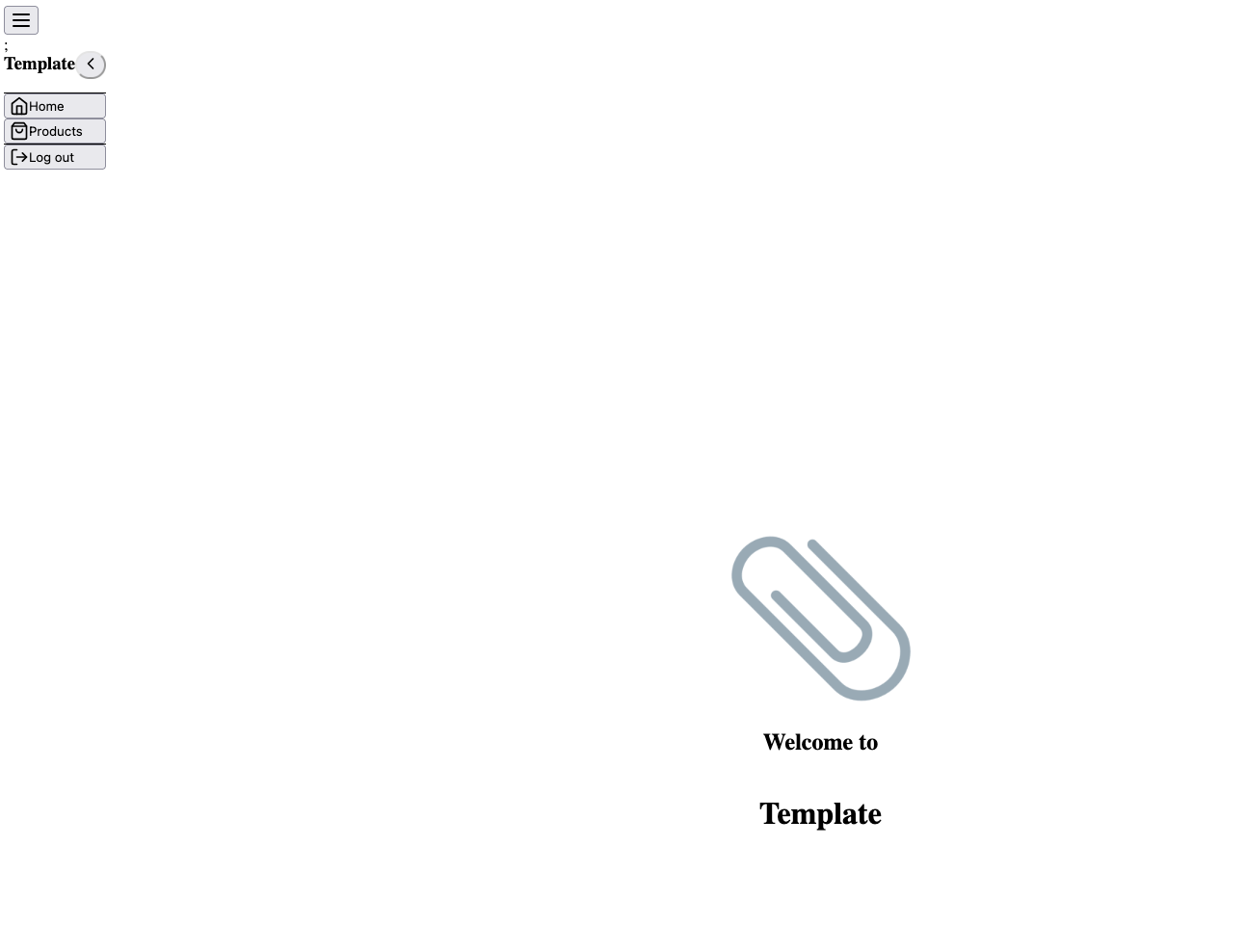

Codex (o4-mini) Inspected the codebase and proposed a plan before requesting user verification. Entered a cycle of compiling and fixing errors that was hard to follow, leaving me with limited visibility into its internal steps. Eventually I intervened myself, and got it to work by installing a few dependencies. While it worked, it was visually the worst result.

Cursor (Auto) Worked quickly, but needed explicit prompts to validate its changes. Partway through validating, it began re-introducing MUI dependencies, at which point I halted the run. I considered intervening more, but that would not be a fair comparison. No result; hence, no screenshots.

GitHub Copilot Agent Mode (GPT-4o & Sonnet 3.7)

Copilot in agent mode currently does not support o3 or o4-mini, unfortunately.

Using 4o, the agent struggled with understanding shadcn/ui.

It attempted to fetch external resources with MCP, though without using valid URLs, and failed completely.

A Sonnet-backed run added the shadcn/ui components but left pages unmodified, then finished while overstating its progress.

No result; hence, no screenshots.

Others

I have not signed up for Zed's agentic beta yet.

While I see a lot of traction, I have not found the willingness yet to invest in adopting a new IDE.

Claude Code's authentication flow has been letting me down so far, so it is excluded for the time being.

Ranking

- Aider

- OpenHands

- Codex

- Cursor

- GitHub Copilot (Agent Mode)

Reflections

I attempted this challenge a few months ago, and now I achieve significantly better results. Extrapolating, I can imagine that in a few months, we will have a solid solution that works first-time right.

- The importance of the foundation model (FM). For now, I believe the FM is more important than the IDE or framework.

- Coding agents not tied to an IDE seem to perform better for this type of challenge. I gather these are optimized for longer autonomy vs. frequent user interaction.

- A coding agent that validates its own results autonomously is perceived (by me) to perform a lot better. I wonder why these coding agents wait until the end though. I have learned the hard way to test early and often.

- Finding a good UI/UX to get the security vs. autonomy trade-off right. Too many prompts are annoying, while a coding agent being destructive would be very annoying.