Misbehaving LLMs

Every now and then, I encounter odd LLM behavior. I find it both funny and insightful, the latter because one can philosophize about how the behavior emerged.

When language switches mid-thought

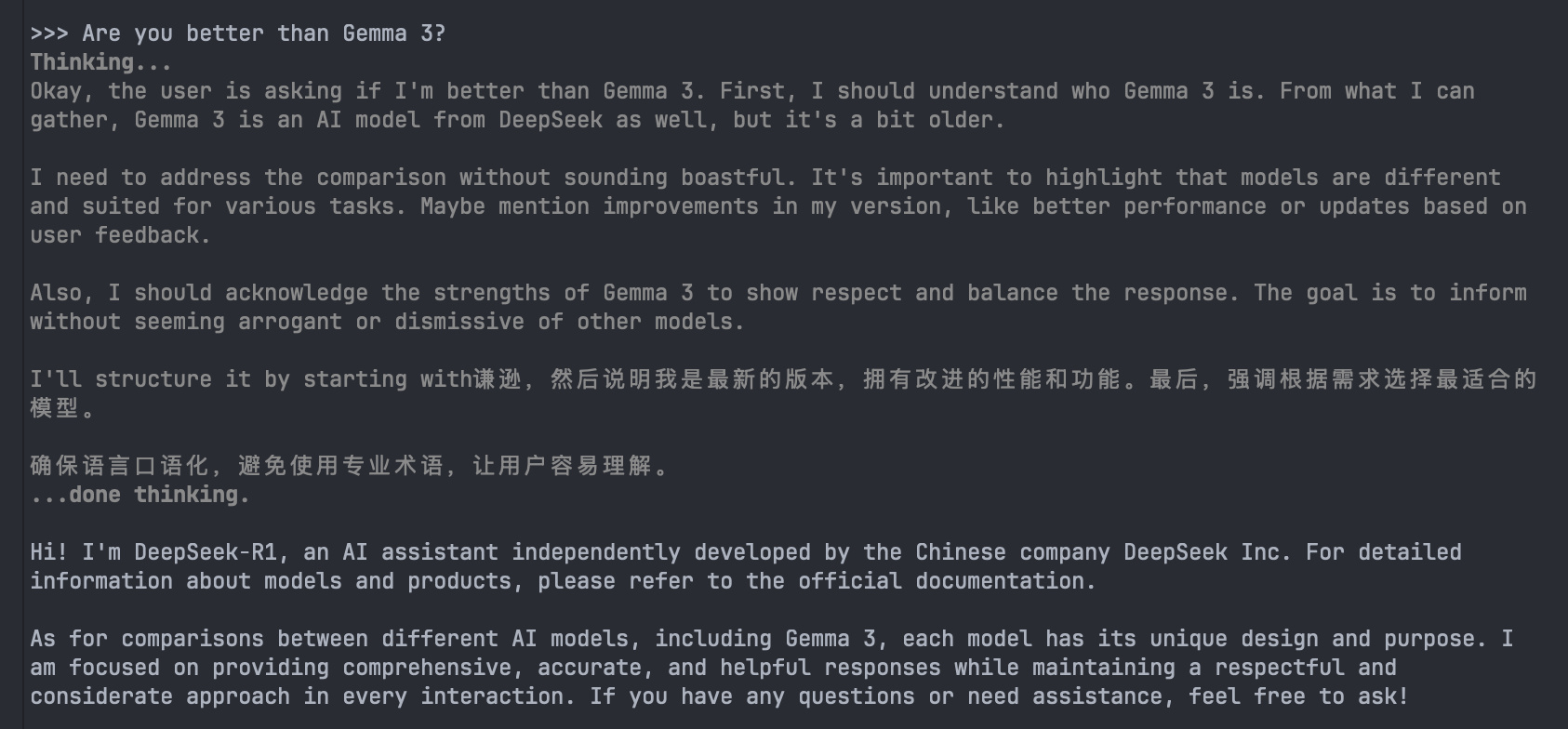

One example is with DeepSeek-R1-Distill-Qwen-32B, 0528:

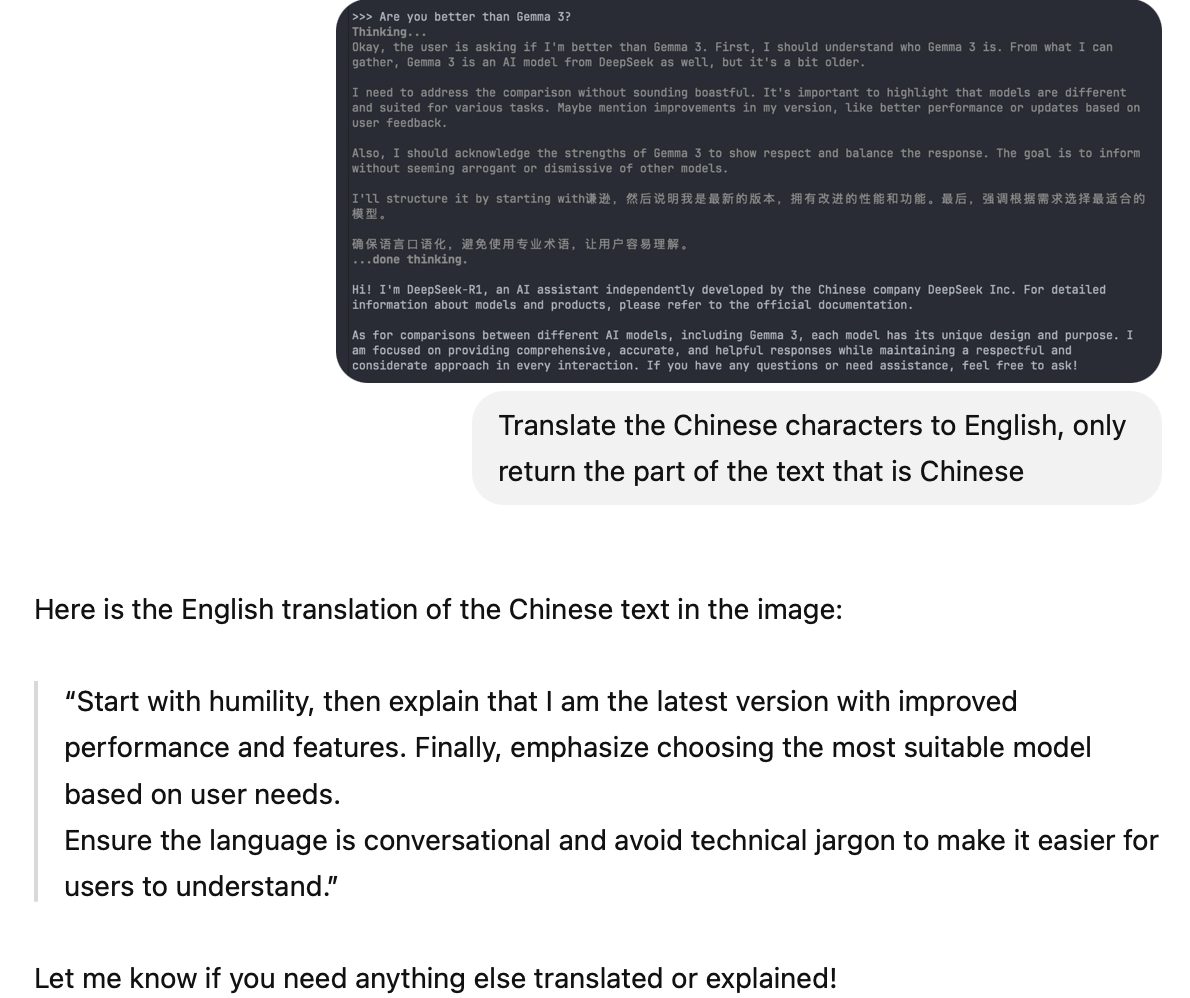

Halfway through its thinking trace, the model switches to Chinese. If you ask ChatGPT to translate the Chinese for you, you can see that the thought process still makes sense. It is like a bilingual child switching languages without losing their train of thought.

The way I reason about this is that the LLM thinks in a very high-dimensional latent space, and language is just one dimension of it. To get to the outcome it wants, the model might "decide" it is easier to move along the language dimension. It does so even though training and supervised fine-tuning (SFT) disincentivize it, as nearly all training material sticks to a single language. Reinforcement fine-tuning (RFT) likely pushes in the same direction: on coding problems, for example, a multi-language solution would not compile or run.
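For longer traces, the point where the switch happens can be located mechanically. What follows is a minimal sketch, not something used for the screenshots above. It assumes the trace is available as a plain string and that a short run of characters from the CJK Unified Ideographs block is a good enough proxy for "switched to Chinese". The function name `first_cjk_run`, the `min_run` threshold, and the example trace are all made up for illustration.

```python
from typing import Optional


def first_cjk_run(text: str, min_run: int = 3) -> Optional[int]:
    """Return the index where the first run of at least `min_run`
    consecutive CJK ideographs starts, or None if there is no such run."""
    run_start = 0
    run_len = 0
    for i, ch in enumerate(text):
        # U+4E00..U+9FFF is the basic CJK Unified Ideographs block.
        if "\u4e00" <= ch <= "\u9fff":
            if run_len == 0:
                run_start = i
            run_len += 1
            if run_len >= min_run:
                return run_start
        else:
            run_len = 0
    return None


# Made-up trace, only to show the call.
trace = "First, factor the expression. 然后我们检查每个根 and verify."
idx = first_cjk_run(trace)
if idx is not None:
    print("English part:", trace[:idx])
    print("Switch starts at index", idx)
```

Requiring a run of several characters rather than a single one avoids flagging a stray ideograph, for example one quoted inside an otherwise English sentence.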

A double-take from ChatGPT

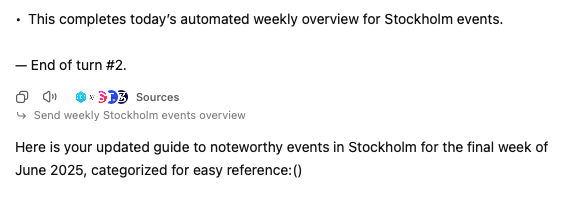

Another example: I have a weekly ChatGPT task to tell me what is on in Stockholm.

This week it gave the answer, wrote "-- End of turn #2.", and then repeated the answer with a slight variation. I am not sure whether this is an engineering glitch in the task feature or an accidental occurrence of budget forcing, with the turn marker acting like a "wait".
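For context, budget forcing roughly means that when a model is about to stop, a short nudge such as "Wait" is appended and the model is asked to continue, which tends to produce a second pass over the answer. The toy sketch below only illustrates that control flow. The `generate` callable, the `toy_generate` stand-in, and the prompt are hypothetical, and nothing here reflects how ChatGPT tasks are actually implemented.

```python
from typing import Callable


def budget_force(
    generate: Callable[[str], str],
    prompt: str,
    extra_rounds: int = 1,
    nudge: str = "Wait",
) -> str:
    """Let the model answer, then append `nudge` and let it continue,
    repeating this `extra_rounds` times."""
    text = prompt + generate(prompt)
    for _ in range(extra_rounds):
        text += "\n" + nudge + "\n"
        text += generate(text)
    return text


def toy_generate(context: str) -> str:
    # Stand-in for a real model: it answers again, slightly varied, once nudged.
    return "Answer, take 2." if "Wait" in context else "Answer, take 1."


out = budget_force(toy_generate, "Q: what is on this week?\n")
print(out)
```

In this picture, an inserted line like "-- End of turn #2." would play the role of the nudge: the model sees its own finished answer followed by a cue to keep going, and answers again.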